What is an LSRL?

Linear regression is a statistical method used to model the linear relationship between a dependent variable (also known as the response variable) and one or more independent variables (also known as explanatory variables). The goal of linear regression is to find the line of best fit that describes the relationship between the dependent and independent variables.

The least squares regression line (LSRL) is the linear regression model that minimizes the sum of the squared differences between the observed values of the dependent variable and the values predicted by the line. These differences (observed minus predicted) are called residuals. The LSRL is often called the "line of best fit" because, by this criterion, no other straight line fits the data points on the scatterplot better.

In simple linear regression, there is only one independent variable, and the line of best fit is a straight line that can be represented by the equation ŷ = a + bx. Here ŷ is the predicted value of the dependent variable, x is the value of the independent variable, a is the y-intercept (the value of ŷ when x = 0), and b is the slope (the change in ŷ for each one-unit increase in x).

Once we have calculated the slope and y-intercept (by hand with b = r(s_y/s_x) and a = ȳ − b·x̄, or with technology), we can plug these values into the equation ŷ = a + bx to get the equation of the least squares regression line.
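The following minimal Python sketch makes the least-squares criterion concrete. It uses a small hypothetical data set that does not come from this guide. The slope and intercept are computed with the standard closed-form formulas b = Σ(x − x̄)(y − ȳ) / Σ(x − x̄)² and a = ȳ − b·x̄; the slope formula is algebraically equivalent to b = r(s_y/s_x). The sketch then checks that nudging either coefficient increases the sum of squared residuals. The function names are illustrative only.

```python
def sum_squared_residuals(xs, ys, a, b):
    """Sum of squared residuals (y - y_hat)^2 for the line y_hat = a + b*x."""
    return sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))


def least_squares_line(xs, ys):
    """Return (a, b) for the least-squares line, using the closed-form solution."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    b = s_xy / s_xx          # slope (equivalent to r * s_y / s_x)
    a = y_bar - b * x_bar    # intercept: the line passes through (x_bar, y_bar)
    return a, b


# Hypothetical data, for illustration only.
xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

a, b = least_squares_line(xs, ys)
best = sum_squared_residuals(xs, ys, a, b)

# Nudging the LSRL's intercept or slope in either direction gives a larger SSE.
for da, db in [(0.1, 0.0), (-0.1, 0.0), (0.0, 0.1), (0.0, -0.1)]:
    assert sum_squared_residuals(xs, ys, a + da, b + db) > best

print(f"y-hat = {a:.2f} + {b:.2f}x, sum of squared residuals = {best:.3f}")
```

Only four alternative lines are checked here. The sum of squared residuals is a bowl-shaped function of a and b, however, so any other line would also do worse.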

Again, it's important to recognize that ŷ represents the predicted value of the response variable, while x represents the value of the explanatory variable. The x-values come from the data set, so they are not predicted; ŷ, however, is always a value predicted by the least squares regression line.

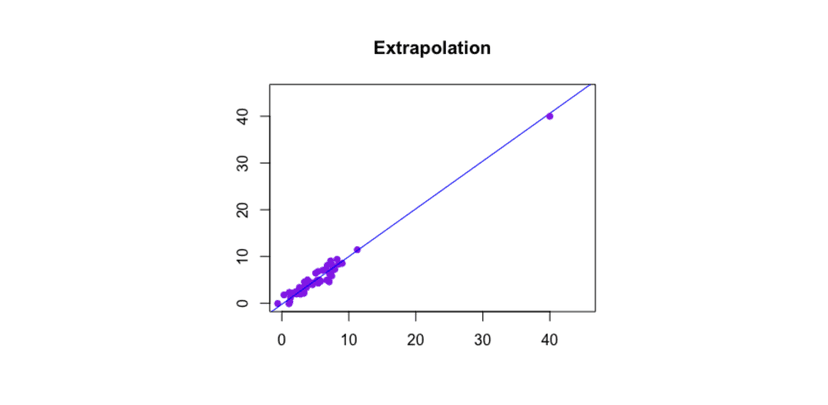

Extrapolation

When you have a linear regression equation, you can use it to predict the value of the response variable for a given value of the explanatory variable. When that explanatory value lies within the range of x-values in the data set, the prediction is called interpolation, and it is generally more reliable.

Extrapolation is the process of using a statistical model to make predictions about values of a response variable outside the range of the data. Extrapolation is generally less accurate than interpolation because it relies on assumptions about the shape of the relationship between the variables that may not hold true beyond the range of the data. The farther outside the range of the data you go, the less reliable the predictions are likely to be.

Be cautious when extrapolating: such predictions are prone to error and can produce unrealistic or misleading results. Always keep the limitations of your model in mind before relying on a prediction made from it.
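A quick way to tell interpolation from extrapolation is to compare the new x-value with the smallest and largest x-values in the data. The Python sketch below does this. The function names and the list of ages are hypothetical, chosen to match the 19 to 24 age range in the example that follows.

```python
def classify_prediction(x_new, observed_xs):
    """Return 'interpolation' if x_new lies within the observed x-range, else 'extrapolation'."""
    low, high = min(observed_xs), max(observed_xs)
    return "interpolation" if low <= x_new <= high else "extrapolation"


def distance_outside_range(x_new, observed_xs):
    """How far x_new lies beyond the observed x-range (0 when it is inside the range)."""
    low, high = min(observed_xs), max(observed_xs)
    if x_new < low:
        return low - x_new
    if x_new > high:
        return x_new - high
    return 0


ages = [19, 20, 21, 22, 23, 24]  # hypothetical ages in a data set
print(classify_prediction(21, ages))     # interpolation
print(classify_prediction(45, ages))     # extrapolation
print(distance_outside_range(45, ages))  # 21
```

The distance is only a rough signal. There is no fixed cutoff, but the larger it is, the less the data can tell you about the response at that x.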

Example

A recent model, built using data on 19- to 24-year-olds, produced a least squares regression line for predicting an individual's comfort level with technology (on a scale of 1 to 10): ŷ = 0.32x + 0.67, where ŷ is the predicted comfort level and x is the person's age in years.

Predict the comfort level of a 45-year-old and explain why this response does not make sense.

ŷ = 0.32(45) + 0.67 = 14.4 + 0.67

ŷ = 15.07 (predicted comfort level)

This answer does not make sense because predicted comfort levels should fall between 1 and 10, and 15.07 is off the scale. We got this response because we used a model built from data on 19- to 24-year-olds to make a prediction about a 45-year-old. Age 45 lies far outside the range of ages in the data set, so this prediction is an extrapolation, which is not a good idea.
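The arithmetic and both warning signs can be checked in a few lines of Python. This is only a sketch. The age of 22 is an arbitrary comparison value inside the data range and is not part of the original example.

```python
INTERCEPT, SLOPE = 0.67, 0.32      # from the example's line: y-hat = 0.32x + 0.67
AGE_MIN, AGE_MAX = 19, 24          # ages used to build the model
SCALE_MIN, SCALE_MAX = 1, 10       # comfort-level scale


def predicted_comfort(age):
    """Predicted comfort level from the example's least squares regression line."""
    return INTERCEPT + SLOPE * age


for age in (22, 45):
    y_hat = predicted_comfort(age)
    in_age_range = AGE_MIN <= age <= AGE_MAX
    on_scale = SCALE_MIN <= y_hat <= SCALE_MAX
    print(f"age {age}: y-hat = {y_hat:.2f}, within data ages: {in_age_range}, on 1-10 scale: {on_scale}")
```

For age 22 the prediction stays on the 1 to 10 scale. Age 45 fails both checks, which signals extrapolation.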

Practice Problem

A study was conducted to examine the relationship between hours of study per week and final exam scores. A scatterplot was generated to visualize the results of the study for a sample of 25 students.

Based on the scatterplot, a least squares regression line was calculated to be ŷ = 42.3 - 0.5x, where ŷ is the predicted final exam score and x is the number of hours of study per week.

Use this equation to predict the final exam score for a student who studies for 15 hours per week.

Answer

To solve the problem, we need to use the equation for the least squares regression line, which is ŷ = 42.3 - 0.5x, where ŷ is the predicted final exam score and x is the number of hours of study per week.

We are asked to predict the final exam score for a student who studies for 15 hours per week, so we can plug this value into the equation to calculate the predicted value of ŷ:

ŷ = 42.3 − 0.5(15)

= 42.3 − 7.5

= 34.8

Interpretation in context: the predicted final exam score for a student who studies 15 hours per week is 34.8.
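The same substitution can be written as a tiny Python function. The function name is hypothetical; the equation is the one given in the practice problem.

```python
def predicted_exam_score(hours):
    """Predicted final exam score from the practice problem's line: y-hat = 42.3 - 0.5x."""
    return 42.3 - 0.5 * hours


score = predicted_exam_score(15)
print(f"Predicted final exam score for 15 hours/week: {score:.1f}")
```

The problem does not state the range of study hours in the sample of 25 students. This sketch therefore cannot say whether 15 hours is an interpolation; you would check the scatterplot for that.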


Vocabulary

The following words are mentioned explicitly in the College Board Course and Exam Description for this topic.

| Term | Definition |
|---|---|
| explanatory variable | A variable whose values are used to explain or predict corresponding values for the response variable. |
| extrapolation | Predicting a response value using a value for the explanatory variable that is beyond the range of x-values used to create the regression model, resulting in less reliable predictions. |
| least-squares regression line | A linear model that minimizes the sum of squared residuals to find the best-fitting line through a set of data points. |
| linear regression model | An equation that uses an explanatory variable to predict a response variable in a linear relationship. |
| predicted value | The estimated response value obtained from a regression model, denoted as ŷ. |
| response variable | A variable whose values are being explained or predicted based on the explanatory variable. |
| slope | The value b in the regression equation ŷ = a + bx, representing the rate of change in the predicted response for each unit increase in the explanatory variable. |
| y-intercept | The value a in the regression equation ŷ = a + bx, representing the predicted response value when the explanatory variable equals zero. |

Frequently Asked Questions

How do I calculate a predicted y value using linear regression?

Use the regression equation ŷ = a + bx, where a is the y-intercept and b is the slope of your least-squares regression line, and plug the given x (explanatory value) into it. Example: if your line is ŷ = 12 + (−0.7)x and x = 20, then ŷ = 12 + (−0.7)(20) = 12 − 14 = −2. Be sure a and b come from your fitted least-squares line (usually from your calculator or computer output). That ŷ is the predicted response for that x. Remember extrapolation: predictions for x far outside the original x-range are unreliable. On the AP exam you may use a graphing calculator to get a and b; practice this skill (and residual interpretation) in the Topic 2.6 study guide (https://library.fiveable.me/ap-statistics/unit-2/linear-regression-models/study-guide/PSt5cfDuvB5nu60DHulR) and try extra problems (https://library.fiveable.me/practice/ap-statistics).
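Here is a minimal Python sketch of the plug-in step. The helper name `predict` is hypothetical; a and b would come from your fitted least-squares line.

```python
def predict(a, b, x):
    """Return the predicted response y-hat = a + b*x for a fitted least-squares line."""
    return a + b * x


# The example line from this answer: y-hat = 12 + (-0.7)x, evaluated at x = 20.
y_hat = predict(a=12, b=-0.7, x=20)
print(round(y_hat, 2))
```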

What's the formula for ŷ in linear regression and what do all the letters mean?

The regression formula is ŷ = a + bx. Here’s what each symbol means (AP terms from the CED):

- ŷ (y-hat) = predicted response value (the fitted value).
- a = y-intercept of the least-squares regression line (predicted y when x = 0).
- b = slope of the regression line; it’s the change in predicted y for a one-unit increase in the explanatory variable x. (On the AP formula sheet, b = r(s_y/s_x).)
- x = explanatory (predictor) variable (what you use to predict y).
- y = actual (observed) value of the response variable (what you’re predicting).
- residual = y − ŷ (the vertical distance from the observed point to the line).

Quick exam tips: use ŷ = a + bx to calculate predicted values (DAT-1.D in the CED), don’t extrapolate beyond the x-range used to fit the line (predictions become less reliable), and interpret slope and intercept in context. For more review see the Topic 2.6 study guide (https://library.fiveable.me/ap-statistics/unit-2/linear-regression-models/study-guide/PSt5cfDuvB5nu60DHulR) and get extra practice (https://library.fiveable.me/practice/ap-statistics).

When do I use ŷ = a + bx vs just y = mx + b?

Use ŷ = a + bx when you’re talking about a fitted regression model and making predictions. The hat (ŷ) shows this is a predicted (fitted) value of the response variable based on the least-squares line; a is the regression y-intercept and b is the slope (CED DAT-1.D.2). Use y = mx + b (or y = bx + a) when writing a generic straight-line equation in algebra or describing a theoretical or ideal line; it doesn’t explicitly refer to “predicted” values from data. Quick checklist:

- If you computed the line from data (computer/calculator output, b = r·(s_y/s_x), etc.) and want to predict a response for an x, write ŷ = a + bx and say you’re predicting the response.
- If you’re just discussing slope and intercept algebraically, y = mx + b is fine.
- Remember: predictions far outside your x-range are extrapolation and less reliable (CED DAT-1.D.3).

For more AP-aligned review, see the Topic 2.6 study guide (https://library.fiveable.me/ap-statistics/unit-2/linear-regression-models/study-guide/PSt5cfDuvB5nu60DHulR) and practice problems (https://library.fiveable.me/practice/ap-statistics).

What's the difference between y and ŷ in regression problems?

y is the actual observed response value from your data; ŷ (y-hat) is the value the regression model predicts for a given x. The least-squares regression line is ŷ = a + bx (CED DAT-1.D.2): plug an explanatory value x into that equation to get ŷ. The difference y − ŷ is the residual, which measures how far the model missed that particular observation. Residuals help you judge model fit: small residuals with no pattern suggest a good fit, so check a residual plot for patterns or nonlinearity. Remember that ŷ is only an estimate based on the sample used to build the line, and predictions for x-values far outside that sample are extrapolations and less reliable (CED DAT-1.D.3). For quick review and practice problems on this topic, see the Fiveable study guide (https://library.fiveable.me/ap-statistics/unit-2/linear-regression-models/study-guide/PSt5cfDuvB5nu60DHulR) and try practice questions (https://library.fiveable.me/practice/ap-statistics).
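A short Python sketch of the residual calculation follows. The line ŷ = 10 + 2.5x and the observed point (4, 23) are hypothetical values chosen for illustration.

```python
def residual(y_observed, a, b, x):
    """Residual = observed y minus predicted y-hat (a + b*x)."""
    y_hat = a + b * x
    return y_observed - y_hat


# Hypothetical line y-hat = 10 + 2.5x and a hypothetical observed point (4, 23).
e = residual(y_observed=23, a=10, b=2.5, x=4)
print(e)  # 3.0: the observed value lies 3 units above the line
```

A positive residual means the observed point lies above the line (the model underpredicted it). A negative residual means it lies below the line.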

How do I find the slope and y-intercept for a linear regression model?

Use the least-squares regression formulas. The regression line is ŷ = a + bx, where b (slope) = r · (s_y / s_x) and a (y-intercept) = ȳ − b·x̄. So:

- Find x̄ and ȳ (the sample means) and s_x and s_y (the sample standard deviations).
- Find r, the sample correlation.
- Compute b = r · (s_y / s_x). This tells how much ŷ changes, on average, for a one-unit increase in x.
- Compute a = ȳ − b·x̄, so that the line passes through the point (x̄, ȳ).

On the AP exam you can use your graphing calculator to get the LSRL directly (for example, STAT → CALC → LinReg(a+bx) on a TI-83/84). It reports a and b and is the method used in many problems; the CED expects you to bring a graphing calculator. Watch out for extrapolation: predictions for x outside the original x-range are less reliable (DAT-1.D.3). For a focused review see the Topic 2.6 study guide (https://library.fiveable.me/ap-statistics/unit-2/linear-regression-models/study-guide/PSt5cfDuvB5nu60DHulR) and for extra practice try the AP problems (https://library.fiveable.me/practice/ap-statistics).
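The steps above can be sketched in Python with the standard-library `statistics` module. The data are hypothetical, and `lsrl_from_data` is an illustrative name, not a library function. The sketch computes r as the average product of z-scores (dividing by n − 1) and then applies b = r(s_y/s_x) and a = ȳ − b·x̄.

```python
from statistics import mean, stdev


def lsrl_from_data(xs, ys):
    """Return (a, b, r) for the least-squares line y-hat = a + b*x."""
    n = len(xs)
    x_bar, y_bar = mean(xs), mean(ys)
    s_x, s_y = stdev(xs), stdev(ys)  # sample standard deviations (divide by n - 1)
    # Correlation r: sum of products of z-scores, divided by n - 1.
    r = sum(((x - x_bar) / s_x) * ((y - y_bar) / s_y) for x, y in zip(xs, ys)) / (n - 1)
    b = r * (s_y / s_x)    # slope
    a = y_bar - b * x_bar  # y-intercept: the line passes through (x_bar, y_bar)
    return a, b, r


# Hypothetical data, for illustration only.
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]
a, b, r = lsrl_from_data(xs, ys)
print(f"y-hat = {a:.2f} + {b:.2f}x  (r = {r:.3f})")
```

A calculator's LinReg(a+bx) output for the same data should agree with these values up to rounding.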

I don't understand extrapolation - can someone explain when predictions become unreliable?

Extrapolation means using your regression line ŷ = a + bx to predict y for an x-value that’s outside the range of x-values used to fit the line (CED: DAT-1.D.3). It becomes unreliable because the linear relationship you estimated might not hold beyond your data, there could be different behavior (nonlinearity), or you don’t know about forces/constraints outside your sample. For example, if your x-values were from 10 to 50, predicting at x = 70 is extrapolation—the further you go past 50, the less trustworthy ŷ is. Extrapolated predictions often have much larger uncertainty (prediction intervals get huge) and can be biased by influential points or model misspecification. On the AP exam, always note whether a prediction is interpolation (inside the x-range) or extrapolation and explain why extrapolated ŷ is less reliable (use terms: residual, influential point, nonlinearity). For a refresher on regression ideas, see the Topic 2.6 study guide (https://library.fiveable.me/ap-statistics/unit-2/linear-regression-models/study-guide/PSt5cfDuvB5nu60DHulR). For broader review and practice, check Unit 2 (https://library.fiveable.me/ap-statistics/unit-2) and AP practice problems (https://library.fiveable.me/practice/ap-statistics).

What does it mean when a problem asks me to "predict the response value" using regression?

When a problem asks you to “predict the response value” with regression, it means find the fitted y (ŷ) for a given explanatory x using the least-squares line. Plug the x into the regression equation ŷ = a + bx, where a is the y-intercept and b is the slope. That ŷ is your predicted response (a point estimate) for the response variable in context. Quick notes for AP Stats: this is exactly DAT-1.D, and you may be asked to compute ŷ and interpret it in context. Mention whether you’re interpolating (x inside the data range; prediction more reliable) or extrapolating (x outside the original x-values; prediction less reliable). If asked, comment on reasonableness using r, R², residuals, or a residual plot. For a short refresher and practice on this topic, check the Topic 2.6 study guide (https://library.fiveable.me/ap-statistics/unit-2/linear-regression-models/study-guide/PSt5cfDuvB5nu60DHulR) and try more practice problems (https://library.fiveable.me/practice/ap-statistics).

How do I know if I'm extrapolating or not when making predictions?

Extrapolation means using your regression line to predict ŷ for an x-value outside the x-range used to fit the line. So first check the scatterplot or your data: find the minimum and maximum x used to compute the LSRL. If your prediction x is less than min(x) or greater than max(x), you’re extrapolating. Extrapolated predictions are less reliable; the further outside the original x-interval, the more uncertain the estimate (CED DAT-1.D.3). On the AP exam, avoid strong conclusions from an extrapolated ŷ; show caution in your interpretation and note that prediction intervals don’t fix the issue. Always locate the x-value on the scatterplot to see whether it’s interpolation or extrapolation, and mention it in your answer. For a quick review of formulas, conditions, and examples on this topic, see the Topic 2.6 study guide (https://library.fiveable.me/ap-statistics/unit-2/linear-regression-models/study-guide/PSt5cfDuvB5nu60DHulR) and Unit 2 overview (https://library.fiveable.me/ap-statistics/unit-2). For extra practice, try the AP practice problems (https://library.fiveable.me/practice/ap-statistics).

Can someone walk me through the steps of using a regression equation to make predictions?

Quick steps you can follow every time you use a regression equation to predict:

1. Identify the regression equation ŷ = a + bx (CED DAT-1.D). Make sure you know which variable is x (explanatory) and which is y (response).
2. Plug the x value into ŷ = a + bx and calculate ŷ. Use your calculator for accuracy (the AP exam allows graphing calculators).
3. Give the predicted value with units and context (e.g., “Predicted test score = 82 points”). Always state it as a predicted response ŷ.
4. Check where x falls relative to the original data; if it’s outside the x-range, note that this is extrapolation (less reliable; DAT-1.D.3).
5. Consider the model’s strength: report r to describe the strength of the linear relationship, or R² to say what fraction of the variation in y the line explains, and look at residuals to see whether the prediction seems reasonable.
6. If needed on the exam, justify assumptions (linearity, no strong outliers or influential points) and mention uncertainty (the typical size of the residuals, or a prediction interval if available).

For a quick refresher, see the Topic 2.6 study guide (https://library.fiveable.me/ap-statistics/unit-2/linear-regression-models/study-guide/PSt5cfDuvB5nu60DHulR). For more practice, Fiveable has lots of problems (https://library.fiveable.me/practice/ap-statistics).
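Steps 2 through 4 can be bundled into one small Python helper. This is a sketch only: the function name, the line ŷ = 10 + 2.5x, and the observed x-values are all hypothetical.

```python
def predict_with_checks(a, b, x, observed_xs, response_label, units):
    """Compute y-hat, state it in context, and flag extrapolation."""
    y_hat = a + b * x
    low, high = min(observed_xs), max(observed_xs)
    kind = "interpolation" if low <= x <= high else "extrapolation"
    message = f"Predicted {response_label} = {y_hat:g} {units} ({kind})"
    if kind == "extrapolation":
        message += "; x is outside the data's x-range, so this prediction is less reliable"
    return y_hat, kind, message


# Hypothetical line y-hat = 10 + 2.5x, fit to hypothetical x-values from 2 to 8.
observed_xs = [2, 3, 5, 6, 8]
print(predict_with_checks(10, 2.5, 4, observed_xs, "test score", "points")[2])
print(predict_with_checks(10, 2.5, 20, observed_xs, "test score", "points")[2])
```

Steps 5 and 6 (judging strength and stating assumptions) still need your own reasoning about r, R², and the residual plot.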

Why is extrapolation bad and how far is too far to predict?

Extrapolation is risky because the least-squares line was fit only to the observed x-values, so ŷ = a + bx is only justified where you have data. Outside the observed x-range (below the sample minimum or above the sample maximum) the relationship may change, so the prediction is less reliable the farther you go. There’s no fixed “how far” rule—as a rule of thumb, don’t predict outside the x-range; if you must, keep it close to the nearest observed x and treat the result as speculative. Always check the scatterplot and residuals for linearity and influential points, and ask whether the linear pattern makes sense in context. Prediction intervals also get wider as you move away from the mean of x, reflecting increased uncertainty. For AP practice, this is covered under DAT-1.D (predicting ŷ and the caution about extrapolation); see the Topic 2.6 study guide (https://library.fiveable.me/ap-statistics/unit-2/linear-regression-models/study-guide/PSt5cfDuvB5nu60DHulR) and try practice problems (https://library.fiveable.me/practice/ap-statistics) to build intuition.
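The point about prediction intervals can be illustrated with the standard formula for the standard error of a single new predicted response in simple linear regression: s·√(1 + 1/n + (x − x̄)²/Σ(x − x̄)²), where s is the standard deviation of the residuals. This goes beyond Topic 2.6 and assumes the usual regression conditions (linear pattern, roughly constant spread). The data and function names below are hypothetical. The sketch shows the standard error, and therefore the interval width, growing as x moves away from x̄.

```python
from math import sqrt


def fit_lsrl(xs, ys):
    """Least-squares intercept and slope (closed form)."""
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    s_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b = s_xy / s_xx
    return y_bar - b * x_bar, b


def prediction_standard_error(xs, ys, x_new):
    """Standard error for predicting one new response at x_new."""
    n = len(xs)
    a, b = fit_lsrl(xs, ys)
    x_bar = sum(xs) / n
    s_xx = sum((x - x_bar) ** 2 for x in xs)
    # s: standard deviation of the residuals, with n - 2 degrees of freedom.
    s = sqrt(sum((y - (a + b * x)) ** 2 for x, y in zip(xs, ys)) / (n - 2))
    return s * sqrt(1 + 1 / n + (x_new - x_bar) ** 2 / s_xx)


# Hypothetical data with x-values from 1 to 5 (mean 3).
xs = [1, 2, 3, 4, 5]
ys = [2, 4, 5, 4, 5]
for x_new in (3, 5, 8, 15):
    print(x_new, round(prediction_standard_error(xs, ys, x_new), 3))
```

This formula only captures random scatter around the line. It cannot warn you if the linear pattern itself changes outside the data, which is the bigger risk when extrapolating.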

What's the difference between the explanatory variable and response variable in regression?

The explanatory variable (x) is the one you use to predict—it's thought to explain changes in another variable. The response variable (y) is the outcome you want to predict or explain. In simple linear regression the model is ŷ = a + bx (CED DAT-1.D.2): x is plugged into the equation to get the predicted response ŷ. The slope b tells how much the predicted response changes, on average, for a one-unit increase in the explanatory variable; a is the predicted y when x = 0. Residuals = observed y − ŷ show how well the explanatory variable predicts the response. Don’t extrapolate: predictions for x values outside the data range are less reliable (CED DAT-1.D.3). For more practice and reminders on wording for the AP exam, see the Topic 2.6 study guide (https://library.fiveable.me/ap-statistics/unit-2/linear-regression-models/study-guide/PSt5cfDuvB5nu60DHulR) and plenty of practice problems (https://library.fiveable.me/practice/ap-statistics).

How do I plug numbers into ŷ = a + bx correctly?

Think of ŷ = a + bx as: start at the y-intercept a, then add b times the x you plug in. Steps:

1. Check units: a is in the response variable’s units, and b is in response units per unit of x (b is the “change in y per 1 unit of x”). a is the predicted y when x = 0.
2. Substitute the given x into the equation. Example: if a = 10, b = 2.5, and x = 4, then ŷ = 10 + 2.5(4) = 10 + 10 = 20.
3. Report ŷ with context and correct units: predicted (response) = 20 (units).
4. Note: predictions are most trustworthy for x inside the x-range used to fit the line (interpolation); outside that range your prediction is an extrapolation and less reliable (CED DAT-1.D.3).

For more practice and AP-style problems on linear regression, check the Topic 2.6 study guide (https://library.fiveable.me/ap-statistics/unit-2/linear-regression-models/study-guide/PSt5cfDuvB5nu60DHulR) and practice sets (https://library.fiveable.me/practice/ap-statistics).

I keep confusing which variable is x and which is y in regression word problems - help?

Short rule: x = the explanatory (predictor) variable, y = the response (outcome) variable. The regression equation ŷ = a + bx uses x to predict y (CED DAT-1.D). Quick ways to decide in word problems:

- If the sentence uses words like predict, estimate, effect of, or given, the thing you’re using to predict is x and the thing you want is y. Example: “predict a person’s weight from their height” → x = height, y = weight.
- Use time order: earlier or controlled measurements are usually x; later measured outcomes are y.
- In experiments, the thing you manipulate is x; the measured result is y.
- Check units in the equation: a is in y’s units, and b is in y’s units per unit of x.
- On scatterplots, the horizontal axis is x and the vertical axis is y.

If the wording is ambiguous, pick one, state your choice, and justify it (exam graders want clear reasoning). For extra practice and examples, see the Topic 2.6 study guide (https://library.fiveable.me/ap-statistics/unit-2/linear-regression-models/study-guide/PSt5cfDuvB5nu60DHulR) and Unit 2 review (https://library.fiveable.me/ap-statistics/unit-2).

When the problem gives me a regression equation, how do I use it to predict values?

If you’re given a regression equation, use it exactly as the CED describes: ŷ = a + bx, where a is the y-intercept, b is the slope, and x is your explanatory value. Plug in the x you want and compute ŷ (remember units: ŷ has the response variable’s units). Example: if ŷ = 10 + 2.5x and x = 4, then ŷ = 10 + 2.5(4) = 20. Always state the answer in context (e.g., “predicted score = 20 points”). Check whether x is inside the original x-range (interpolation); predictions are less reliable if you extrapolate beyond that range. Also be ready to compute and interpret the residual (observed − ŷ) and mention the strength of the fit (r or R²) if given. On the AP exam you may use a graphing calculator for regression and predictions. For a quick refresher on formulas and examples, see the Topic 2.6 study guide (https://library.fiveable.me/ap-statistics/unit-2/linear-regression-models/study-guide/PSt5cfDuvB5nu60DHulR) and try the practice problems (https://library.fiveable.me/practice/ap-statistics).

What does "beyond the interval of x-values" mean for extrapolation?

“Beyond the interval of x-values” just means using the regression line to predict at an x that falls outside the smallest and largest x in your data. For example, if all your observed x’s are between 10 and 50, plugging x = 60 into ŷ = a + bx is extrapolation. The CED (DAT-1.D.3) warns that extrapolated predictions are less reliable—the further you go past the observed x-range, the more uncertain the prediction, because the linear pattern may change outside your sample. On the AP exam you should label such predictions as extrapolation and avoid trusting them without strong external justification. For quick review of wording and examples in Topic 2.6, see the Fiveable study guide (https://library.fiveable.me/ap-statistics/unit-2/linear-regression-models/study-guide/PSt5cfDuvB5nu60DHulR). For extra practice distinguishing interpolation vs. extrapolation, try problems on the AP practice page (https://library.fiveable.me/practice/ap-statistics).